Sometimes I feel like writing or talking on social media, but deciding which language to use is tricky. Russians don’t know Spanish. Many Panamanians don’t speak English fluently and certainly don’t know Russian.

Everything I watch and read is in English, and all my notes are in English too, but I rarely speak it.

After ten years in the tropics, I have begun to forget some Russian words. I don’t know whether it’s worth relearning them; it’s probably better to learn to speak correct Spanish. I often talk like a dockworker, with zero politeness. My partner suggested that when I meet new people, I mention early in the conversation that I’m Russian and that in our country frankness isn’t considered rude.

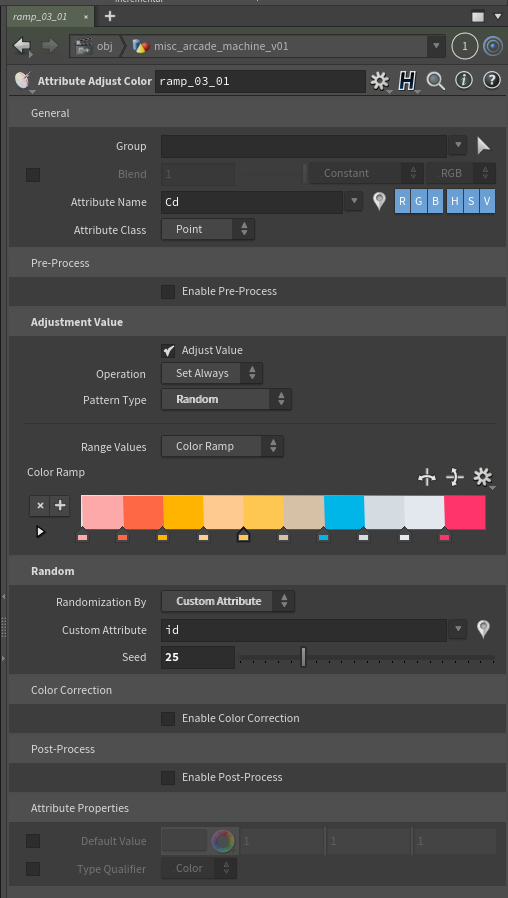

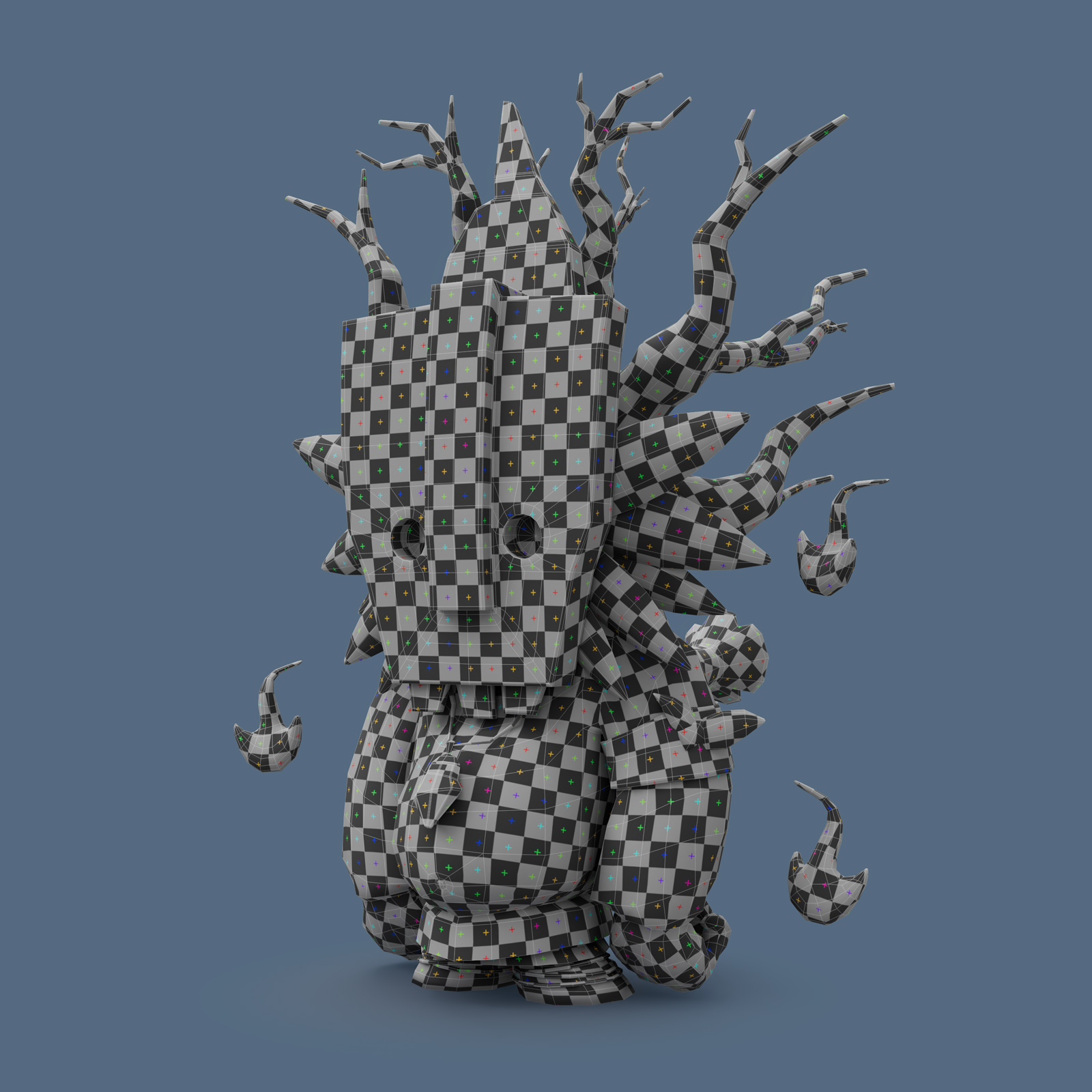

In a video YouTube recommended to me, a guy makes a dialog icon in Sketch in five minutes:

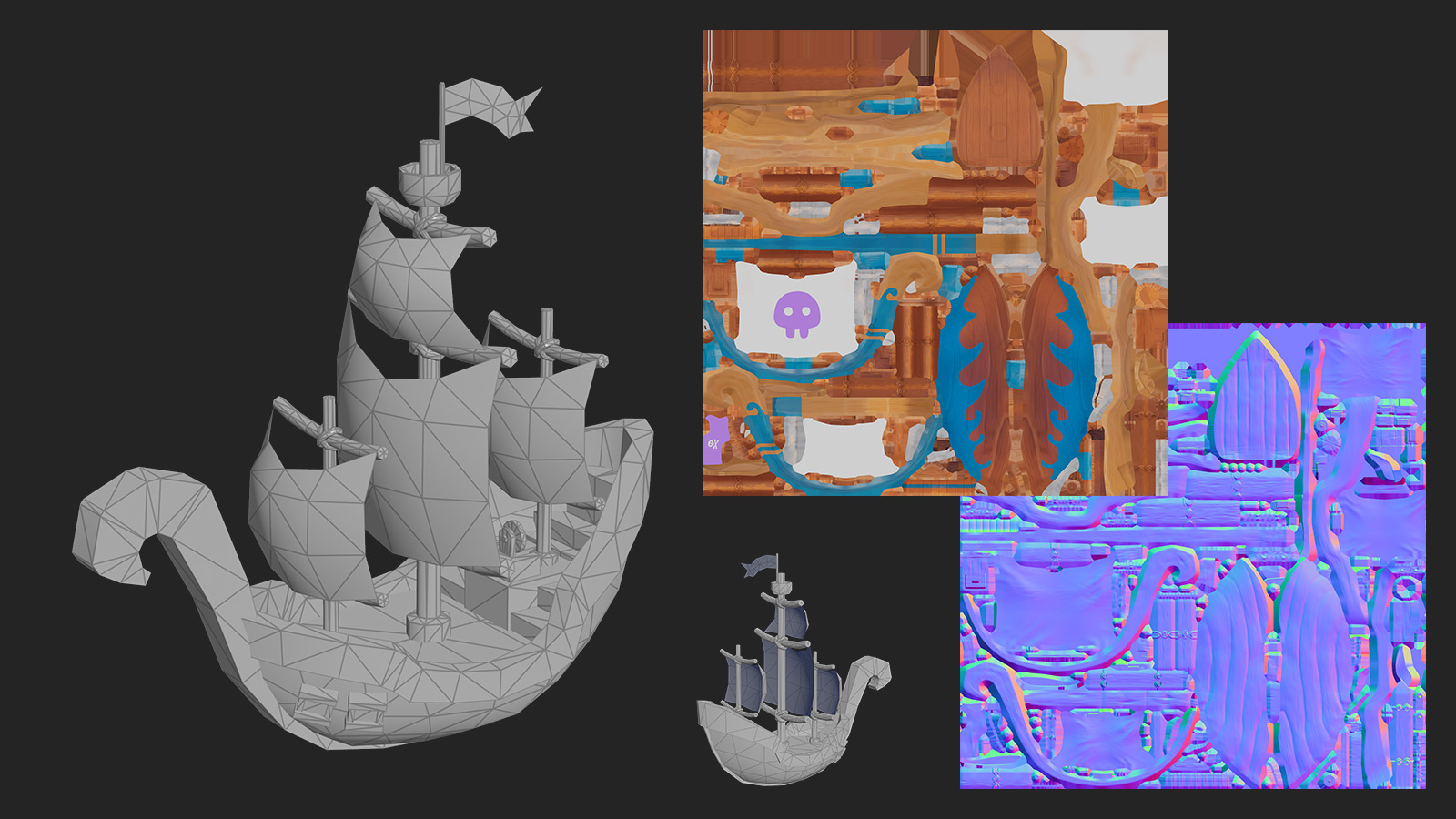

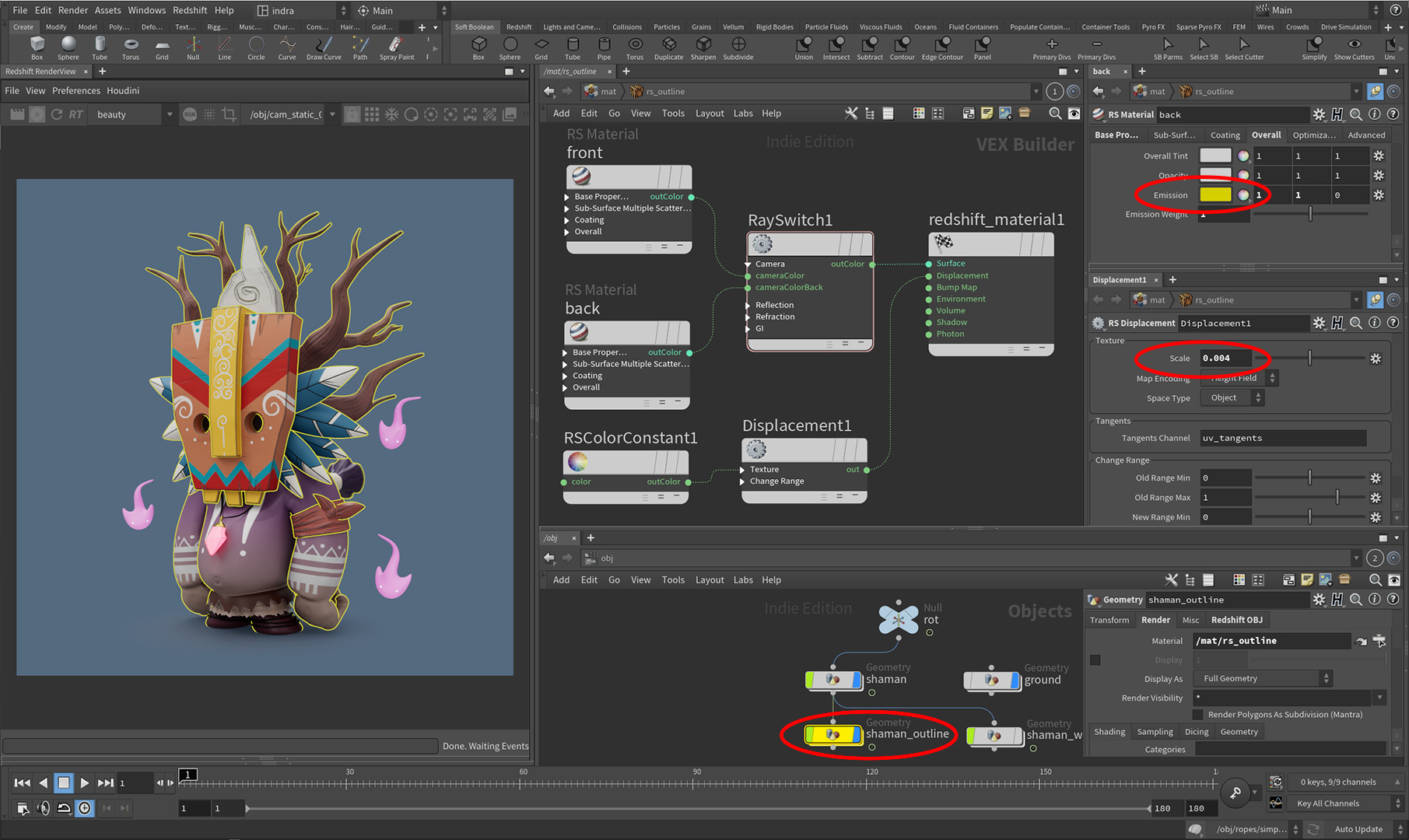

I liked the thumbnail, so I recreated it in 3D.

At the same time, I practiced stitching high dynamic range (HDR) panoramas, which are used to light most 3D scenes. Just before the quarantine started, I took a few photos in the office. Unfortunately, the only program that stitches them well (PTGui 12) costs $300. The demo is fully functional, but it covers the output with watermarks. In Photoshop, however, they can be removed even in 32-bit mode. I guess that’s OK for a just-for-fun project.

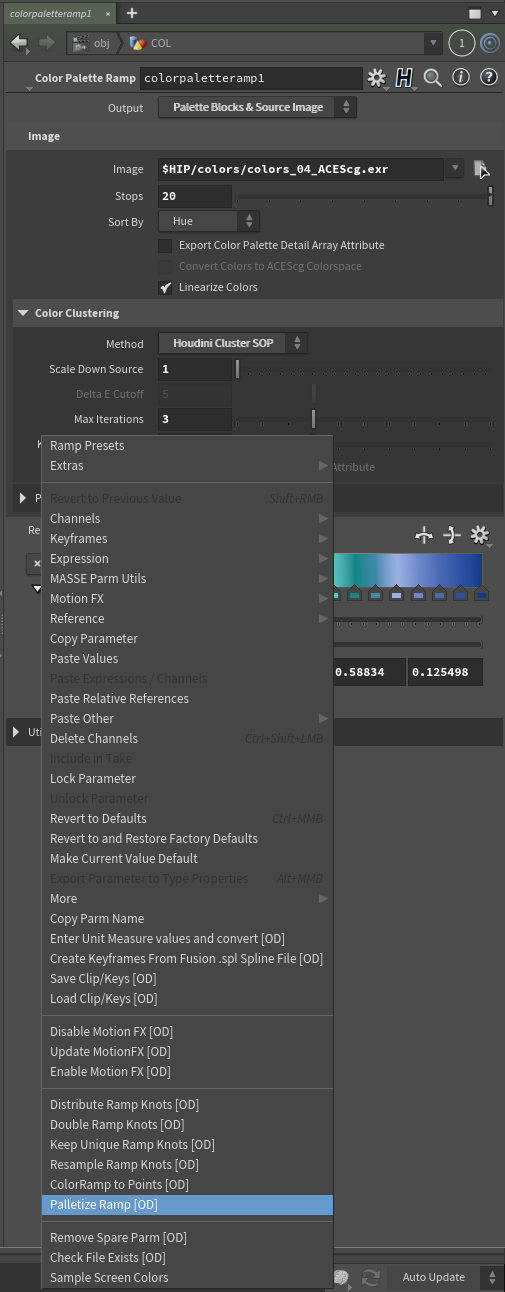

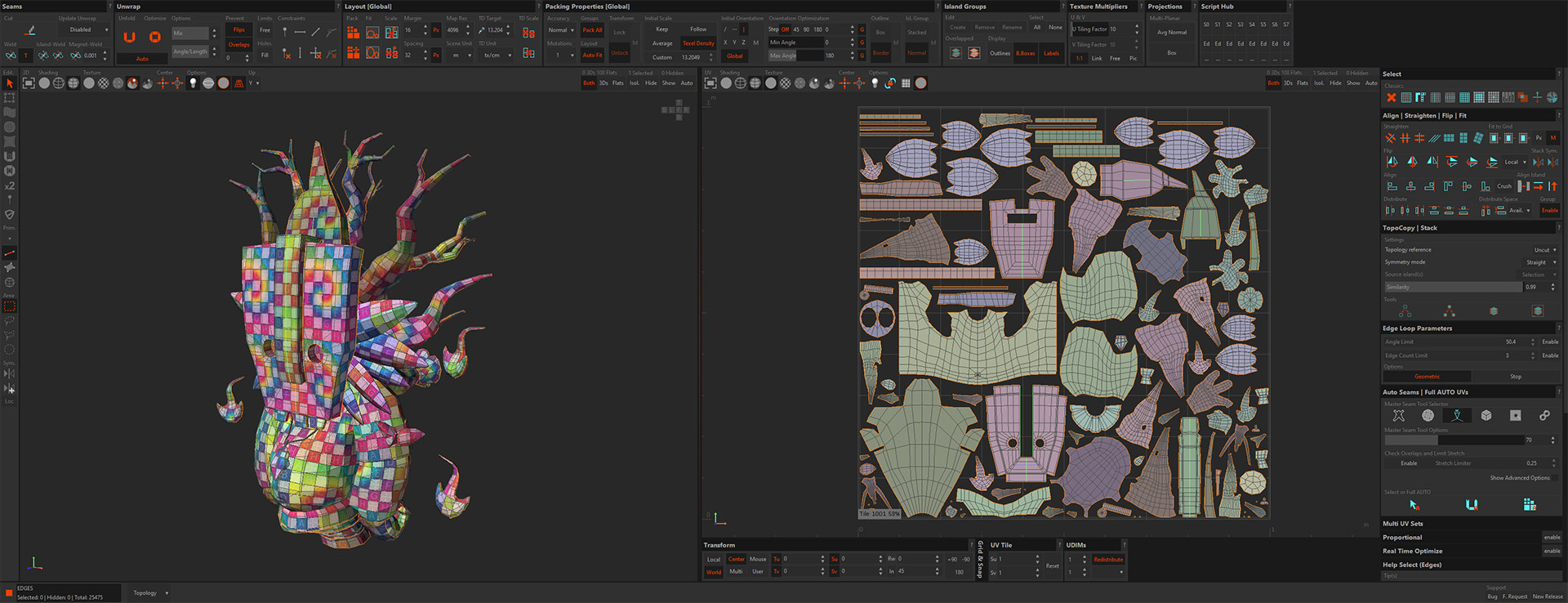

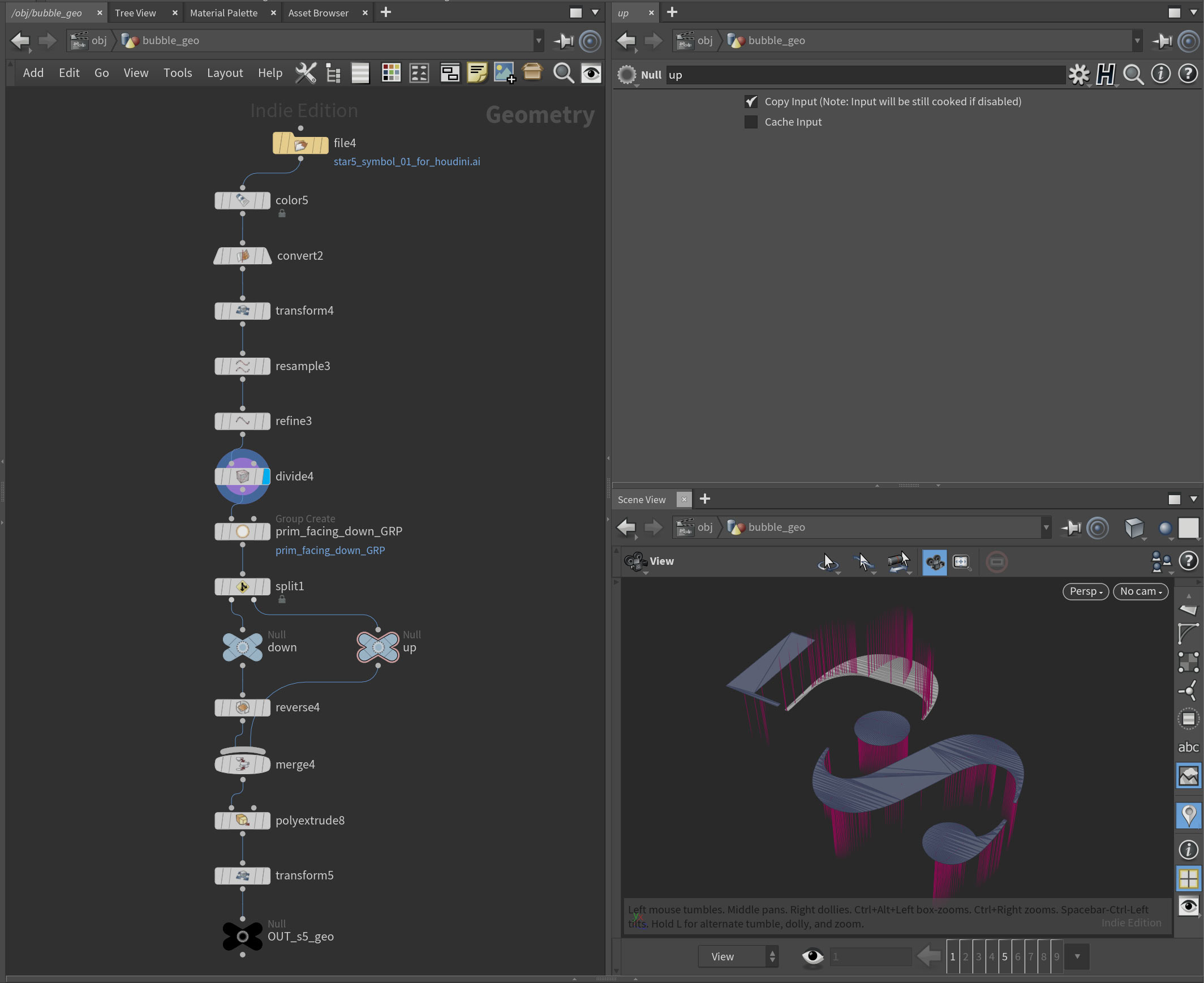

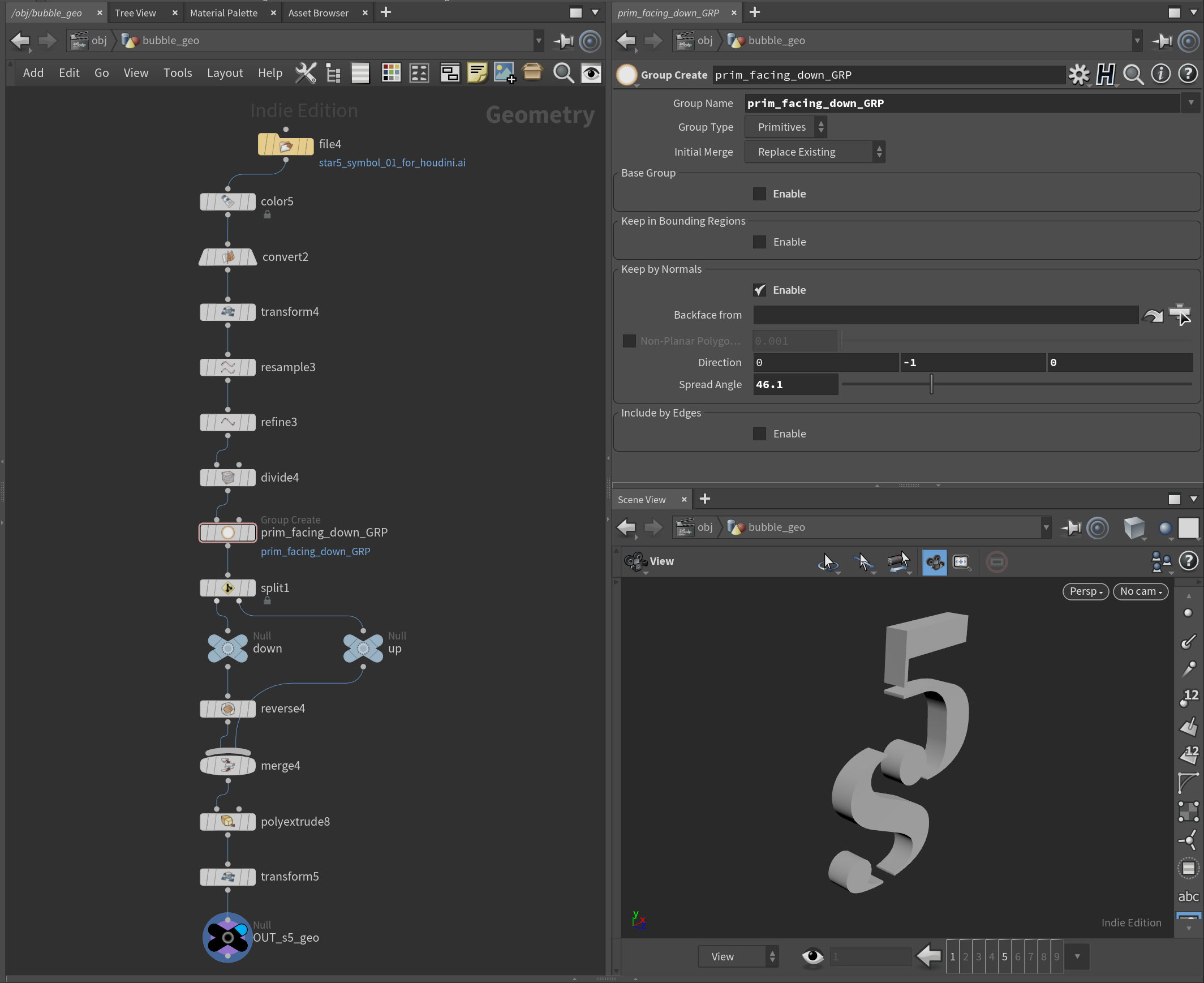

I also figured out a bit more about how point normals and primitive normals differ in Houdini.

Sometimes I need to bring vector graphics from Illustrator into Houdini and extrude them.

A constant problem is that some parts come in flipped and extrude in the opposite direction. This happens because the paths were drawn in different directions in Illustrator.

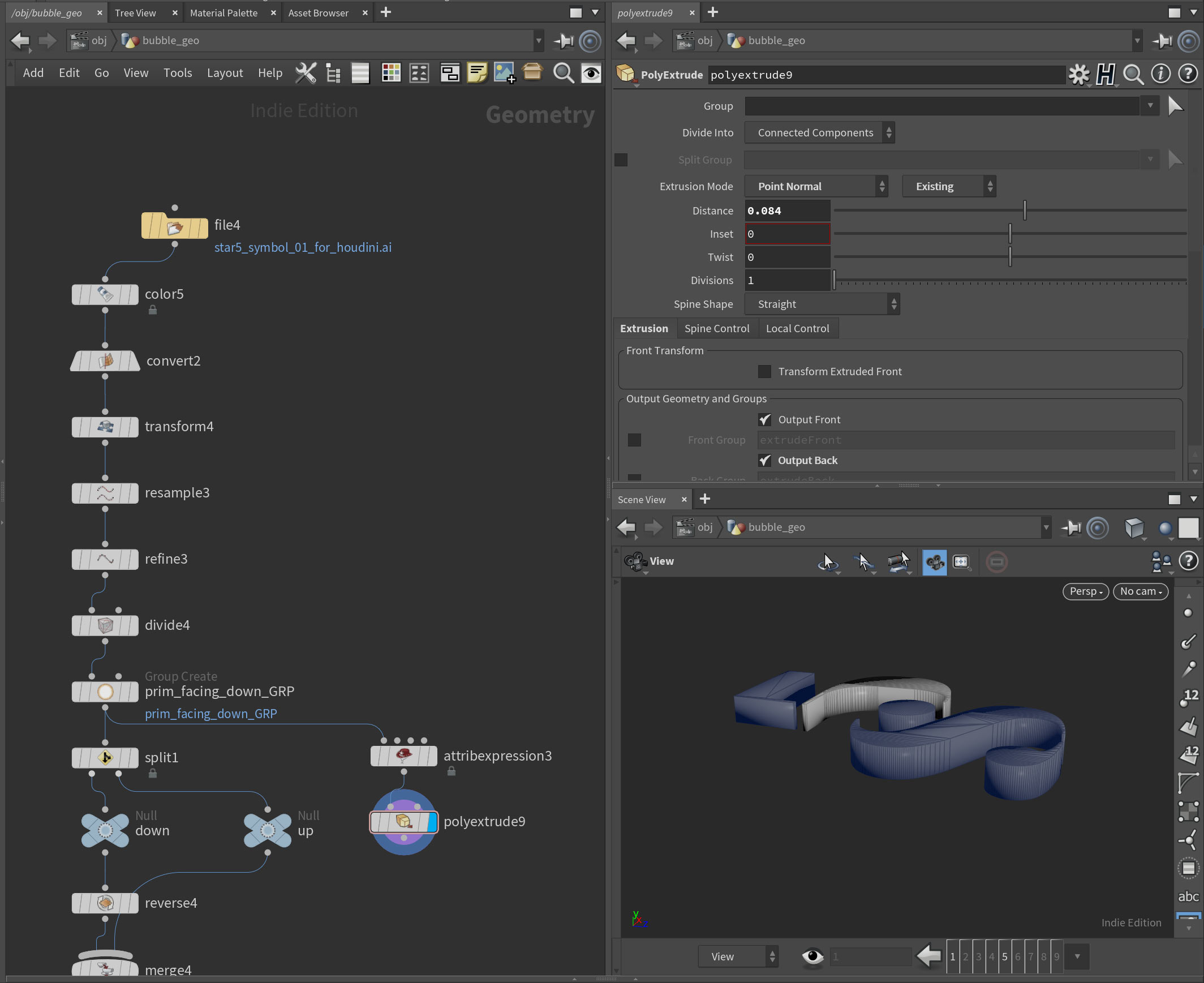

So I thought I could add an Attribute Expression node, set it to Points, set the attribute to Normal, choose Constant Value from the VEXpression dropdown, and enter 0, 1, 0 in the Constant Value field to get normals pointing up. But that does not change the primitive normals, because primitive normals are not actually an attribute. They are derived information, calculated from the vertices that make up the primitive, so they cannot be modified directly. You can still use PolyExtrude, set it to use point normals, and set the extrusion mode to Existing, but you will end up with geometry where some primitive normals face “out” and others face “in”. I don’t know if there is an easy fix for that.
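
To see why a primitive normal follows vertex order, here is a small plain-Python sketch (not Houdini’s `hou` module) that computes a polygon’s normal from its vertices using Newell’s method. It assumes the right-hand rule. Houdini’s own winding convention may be the opposite, but under either convention, reversing the vertex order negates the normal. The name `newell_normal` is mine and is only for illustration.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def newell_normal(points: List[Vec3]) -> Vec3:
    """Unit face normal derived only from vertex order (Newell's method, right-hand rule)."""
    nx = ny = nz = 0.0
    for i, (x0, y0, z0) in enumerate(points):
        # Pair each vertex with the next one, wrapping around to close the polygon
        x1, y1, z1 = points[(i + 1) % len(points)]
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length == 0.0:
        raise ValueError("degenerate polygon has no normal")
    return (nx / length, ny / length, nz / length)


# A unit square lying flat in the XZ plane, like a path imported from Illustrator
square = [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)]
print(newell_normal(square))                  # (0.0, -1.0, 0.0)
print(newell_normal(list(reversed(square))))  # (0.0, 1.0, 0.0)
```

The points are identical in both calls. Only their order changes, and that alone flips the normal. This is why setting a point attribute cannot fix it.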

So after bringing your paths in from Illustrator, you first need to separate the primitives that are flipped. You can do this with a simple Group node: enable only “Keep by Normals”, set the direction to 0, -1, 0, and lower the spread angle. There is also a Labs Split Primitives by Normal node that does exactly this with fewer clicks.
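
At its core, keeping primitives by normal is an angle test between each primitive’s normal and a chosen direction. Below is a plain-Python sketch of that test. It assumes the normals are already known. It illustrates the idea and is not how the Group node is actually implemented. `keep_by_normals` and its parameters are hypothetical names that only mirror the node’s direction and spread angle settings.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def keep_by_normals(
    normals: Dict[int, Vec3],
    direction: Vec3 = (0.0, -1.0, 0.0),
    spread_deg: float = 10.0,
) -> List[int]:
    """Return ids of primitives whose normal lies within spread_deg of direction (hypothetical helper)."""
    dlen = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / dlen for c in direction)
    kept = []
    for prim, n in normals.items():
        nlen = math.sqrt(sum(c * c for c in n))
        cos_angle = sum(a * b for a, b in zip(n, d)) / nlen
        # Clamp to guard acos against tiny floating-point overshoot
        cos_angle = max(-1.0, min(1.0, cos_angle))
        if math.degrees(math.acos(cos_angle)) <= spread_deg:
            kept.append(prim)
    return sorted(kept)


# Primitive id -> normal; 1 and 3 face (roughly) down, 0 and 2 face up
normals = {0: (0, 1, 0), 1: (0, -1, 0), 2: (0, 1, 0), 3: (0.05, -0.99, 0)}
print(keep_by_normals(normals, spread_deg=10.0))  # [1, 3]
```

Lowering `spread_deg` makes the test stricter. Primitive 3 is about 3 degrees off straight down, so it is kept at 10 degrees but would be dropped at 1 degree.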

Then use a Reverse node on that group. It reverses the vertex order of those primitives, which flips their normals.
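
Putting the grouping and reversing steps together: find the polygons that face away from up, then reverse their vertex order. This plain-Python sketch assumes polygons are simple lists of points lying in the XZ plane. `face_normal` and `reverse_flipped` are hypothetical helpers, not Houdini functions. The normal uses the right-hand rule, which may be the opposite of Houdini’s convention. Whichever convention applies, the result is the same: every polygon ends up wound the same way.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Polygon = List[Vec3]


def face_normal(poly: Polygon) -> Vec3:
    """Unnormalized Newell normal (right-hand rule); enough for a sign test."""
    nx = ny = nz = 0.0
    for i, (x0, y0, z0) in enumerate(poly):
        x1, y1, z1 = poly[(i + 1) % len(poly)]
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    return (nx, ny, nz)


def reverse_flipped(polys: List[Polygon], up: Vec3 = (0.0, 1.0, 0.0)) -> List[Polygon]:
    """Reverse the vertex order of every polygon whose normal points away from `up`."""
    fixed = []
    for poly in polys:
        facing = sum(a * b for a, b in zip(face_normal(poly), up))
        fixed.append(list(reversed(poly)) if facing < 0 else list(poly))
    return fixed


flipped = [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)]  # wound so it faces down
ok = [(2, 0, 1), (3, 0, 1), (3, 0, 0), (2, 0, 0)]       # already faces up
fixed = reverse_flipped([flipped, ok])
print([face_normal(p)[1] > 0 for p in fixed])  # [True, True]
```

Only the flipped polygon is touched. Reversing a list of vertices is all the fix needs, because the normal is recomputed from that order.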

Takes are also amazing. You can create different versions of a scene and, in the render node, save each image with the take name in it, like this:

`` $HIP/render/r01/bubbles.static.s5.`chsop("take")`.$F2.tif ``

The `` `chsop("take")` `` part inserts the take name, so in my case the output files are:

`bubbles.static.s5.blue_bubbles.01.tif`

`bubbles.static.s5.orange_bubbles.01.tif`
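
Houdini expands that path itself. To make the substitution explicit, here is a toy Python version of it. It only handles the three pieces used above (`$HIP`, the backticked `chsop("take")` expression, and `$F2`). It assumes `$F2` means the frame number zero-padded to at least two digits. The function `expand_output_path` and the `/proj` directory are hypothetical.

```python
import re

PATTERN = '$HIP/render/r01/bubbles.static.s5.`chsop("take")`.$F2.tif'


def expand_output_path(pattern: str, hip: str, take: str, frame: int) -> str:
    """Toy expansion of the output path; Houdini does the real evaluation."""
    # The backticked expression evaluates to the name of the take being rendered
    path = pattern.replace('`chsop("take")`', take)
    path = path.replace("$HIP", hip)
    # $F<n>: current frame zero-padded to at least n digits; bare $F is unpadded
    return re.sub(r"\$F(\d*)", lambda m: str(frame).zfill(int(m.group(1) or 0)), path)


for take in ("blue_bubbles", "orange_bubbles"):
    print(expand_output_path(PATTERN, "/proj", take, 1))
# /proj/render/r01/bubbles.static.s5.blue_bubbles.01.tif
# /proj/render/r01/bubbles.static.s5.orange_bubbles.01.tif
```

Rendering each take from the same node therefore writes to a different file name without touching the output path.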